As DevOps practitioners ourselves, we know that securing AWS environments is complicated. Have you thought about approaching security the same way DevOps teams build and manage their AWS infrastructure? If not, you should.

According to the 2021 State of DevOps Report, the highest-performing organizations outperform the competition on security by integrating it into the end-to-end software delivery process.

But how do you integrate security into your DevOps workflow? You need to focus on two things:

- Build organizational agreement that DevOps shares responsibility for securing your organization’s infrastructure.

- Implement Policy as Code to automate your security policies so they fit seamlessly into every stage of the software development lifecycle.

The power of DevOps

Many of us have been around long enough to remember the world of siloed IT organizations. In those times, teams had to adhere to a painful process of opening tickets in order to request infrastructure many months before needing it. Sadly, that infrastructure rarely arrived on time, nor did it meet spec! Today, the world looks very different.

DevOps owns infrastructure

Central IT and security no longer hold the keys to the kingdom. More and more, the DevOps team owns the provisioning of infrastructure. Today, DevOps practitioners can provision infrastructure and services on demand. Even better, teams can begin using new AWS infrastructure services or technologies on the same day they are released.

Of course, those shiny new AWS services come with a ton of security options. But the user must decide which security choices to make. As the saying goes, with great power comes great (shared) responsibility.

This agility has created its own set of problems. Teams are spinning up the latest infrastructure and services as fast as they can run terraform apply. Keeping track of infrastructure assets, controlling spending, and managing the ever-expanding configuration footprint have become pressing issues. Meanwhile, security teams work to keep the business safe, grappling with this growing complexity while also combating increasingly sophisticated threats.

Who owns the solution?

Just as not all DevOps engineers are security experts (or even security-conscious), not all security experts are engineers versed in the world of automation and GitOps. More often than not, the DevOps role is a part of engineering or operations teams. They rarely report into the office of the CISO.

This organizational gap creates a problem. The security team may have a solution that provides observability into AWS infrastructure, so they can see when that infrastructure is not configured according to security best practices or policy. But changing infrastructure could impact availability, so security teams depend on DevOps to ensure infrastructure and policy are aligned.

The solution? Buy a security product that allows the security team to block the DevOps team any time they violate policy!!! Just kidding…that is a terrible idea.

A better solution …

Choosing security tools for AWS

When it comes to security tooling for AWS infrastructure and services, there is no shortage of options.

Native AWS security tooling

AWS itself comes with a myriad of tools and services. AWS Config, CloudTrail, GuardDuty, CodeBuild, and CodeDeploy are all designed to help businesses build and secure their AWS environments. On top of the native tooling in the platform, AWS Marketplace offers a vast range of third-party solutions.

Unfortunately, the majority of security solutions for AWS fall short of delivering value for companies practicing DevOps. This is because these tools are designed with traditional security teams in mind, rather than the DevOps teams who build and automate the environments.

Tool friction

Rather than helping to address the security needs of the business, these solutions widen the gap between security and DevOps. When you add friction to innovation, you cause pain for everyone, including your customers.

If you really want to improve your security posture in AWS, you must look for security solutions that empower the teams of people tasked with building and managing AWS…the DevOps teams.

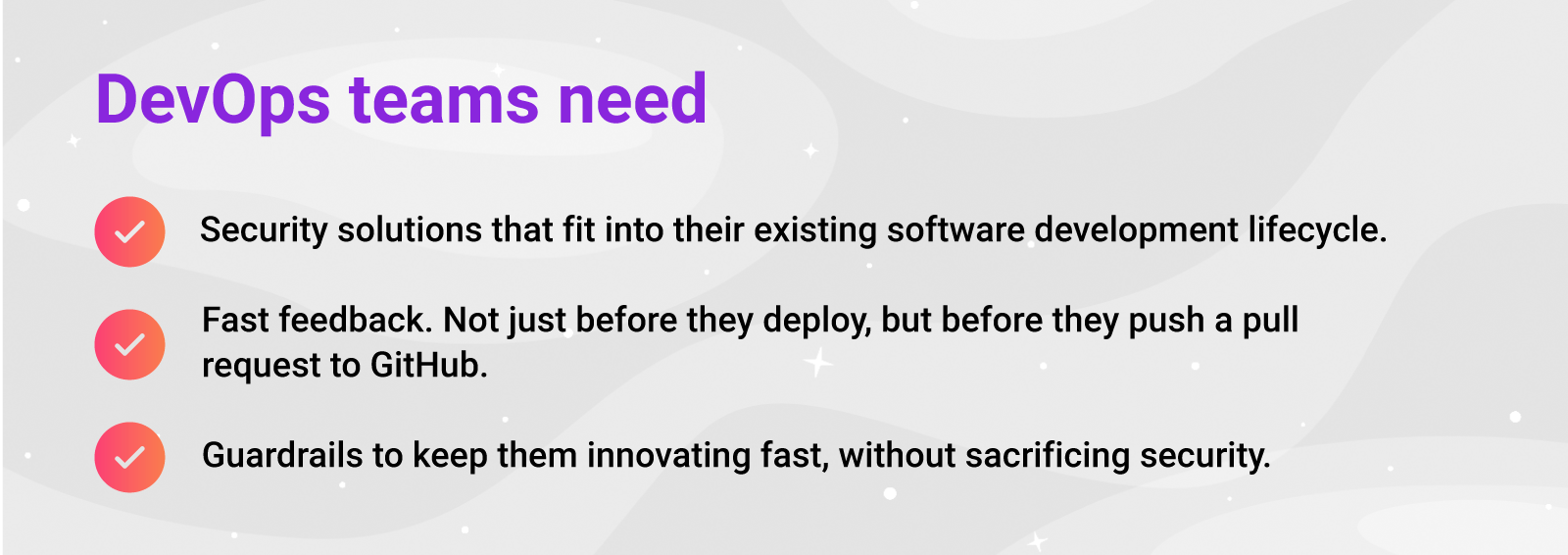

What security tooling do DevOps practitioners need?

First of all, let’s just get this out of the way…what DevOps doesn’t need is tooling that generates a bunch of security alerts that only get triggered after a deployment. Nor do they want tooling that requires logging in somewhere outside of their existing workflow.

As much as security is critical for modern technology businesses, innovation and bringing products to market are the top priority. DevOps teams measure their impact by how quickly they can bring innovation to market.

Test security during development

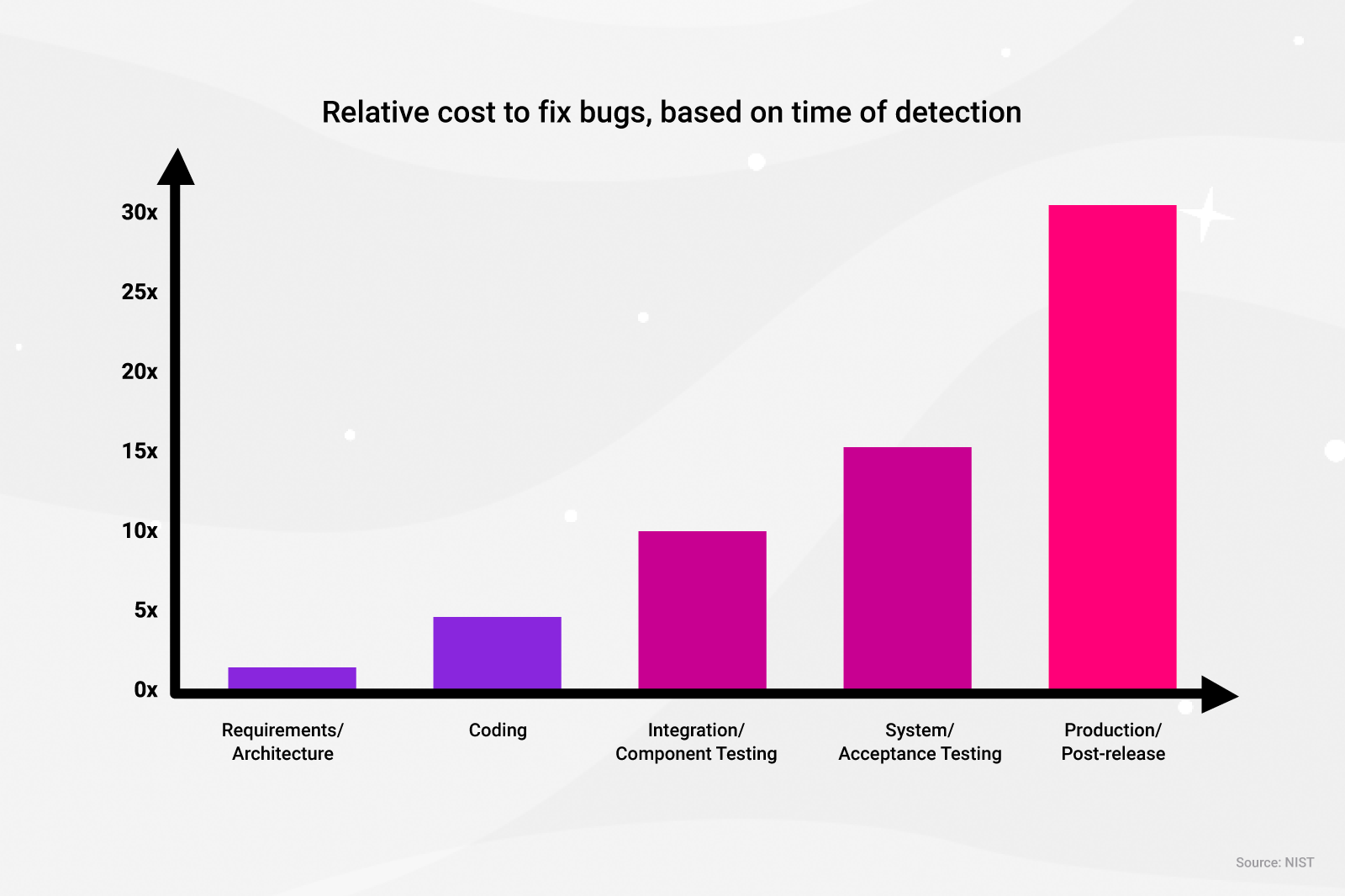

Changes to AWS infrastructure start on the DevOps engineer’s laptop, which means we can test security policies at this critical first stage. Early testing can have a profound and positive impact on your business.

The cost of fixing bugs increases exponentially the closer you move towards production. The longer you wait to address security misconfigurations, the greater the risk to the business.

You need infrastructure security tests to run against changes as they flow through CI/CD pipelines. But the pipeline shouldn’t be the first place DevOps engineers find out whether those tests will pass. Giving DevOps engineers the ability to test AWS infrastructure and services against policy during local development is what keeps innovation flowing.

A DevOps engineer developing Terraform code works in a local development environment such as Visual Studio Code to write and test automation code that manages all of the infrastructure and services for their AWS environment. This is the place where DevOps engineers need solutions to test that their infrastructure adheres to company policy.
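To make local testing concrete, here is a minimal Python sketch (not Mondoo’s policy format) that checks a Terraform plan before anything is applied. It assumes the plan was exported to JSON with `terraform plan -out=tfplan` followed by `terraform show -json tfplan > plan.json`, and it reads the `resource_changes` list from that output. The rule itself, requiring all four flags on `aws_s3_bucket_public_access_block` to be true, is an illustrative assumption rather than a requirement from this post.

```python
import json

# Attributes of aws_s3_bucket_public_access_block that this sketch requires to be true.
REQUIRED_FLAGS = (
    "block_public_acls",
    "block_public_policy",
    "ignore_public_acls",
    "restrict_public_buckets",
)


def load_plan(path: str) -> dict:
    """Read the JSON produced by `terraform show -json <planfile>`."""
    with open(path) as f:
        return json.load(f)


def check_public_access_blocks(plan: dict) -> list[str]:
    """Return human-readable violations found in a Terraform plan."""
    violations = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_s3_bucket_public_access_block":
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # resource is being destroyed; nothing to check
            continue
        for flag in REQUIRED_FLAGS:
            if after.get(flag) is not True:
                violations.append(f"{rc['address']}: {flag} should be true")
    return violations
```

Because it only reads a plan file, a check like this runs the same way in an editor’s terminal as it does in a CI job, for example `check_public_access_blocks(load_plan("plan.json"))`.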

You might be saying to yourself, “we can already ensure our infrastructure is configured correctly with Terraform, we just need to implement the security requirements.” This is a common misconception. To better understand this we need to explain the difference between configuration and policy.

Configuration and Policy are not the same

A big misconception is the idea that if you are using configuration management and/or infrastructure as code to automate your environments, that is sufficient evidence that your environments comply with company policies or standards.

Configuration management tools like Ansible, Chef, and Puppet, or Infrastructure as Code tools like Terraform, AWS CloudFormation, and Pulumi, define the state that systems and infrastructure should be in. Using configuration management and infrastructure as code for automation is a fundamental tenet of high-performing DevOps teams.

We at Mondoo are big proponents of both configuration management and infrastructure as code and rely on both internally to automate our environments. That being said, these tools are not designed to address the needs of testing for compliance, security, and adherence to policies.

In the world of tech, policies define the rules that the business must follow and the rationale behind each one. A business might choose to follow a given policy to pass a compliance audit such as SOC 2, PCI, or GDPR.

Your business may define a policy that all AWS infrastructure use a specific tagging pattern to help with tracking costs across business units. Still, policies that apply to ALL infrastructure in all circumstances are rare. You need flexibility when enforcing policies.
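As an illustration, a tagging policy like this one can be expressed as a small check over the same kind of Terraform plan JSON. The tag names `CostCenter` and `Owner` are hypothetical, and the sketch only inspects each resource’s own `tags` attribute; tags inherited from the AWS provider’s `default_tags` appear in `tags_all`, which this sketch deliberately ignores.

```python
# Hypothetical tagging standard used for cost tracking across business units.
REQUIRED_TAGS = {"CostCenter", "Owner"}


def missing_tags(plan: dict, required: set[str] = REQUIRED_TAGS) -> dict[str, set[str]]:
    """Map each taggable resource address in the plan to the required tags it lacks."""
    result = {}
    for rc in plan.get("resource_changes", []):
        after = rc.get("change", {}).get("after") or {}
        # Skip resources being destroyed (after is null) and types without a tags attribute.
        if "tags" not in after:
            continue
        tags = after.get("tags") or {}  # tags can be null when none are set
        missing = required - set(tags)
        if missing:
            result[rc["address"]] = missing
    return result
```

Returning the missing tag names, rather than a bare pass/fail, gives the engineer something they can fix immediately.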

Customization and collaboration

Policies are nuanced, and often need exceptions for specific scenarios. For example, while best practices in AWS advise that an S3 bucket should not be publicly accessible, business requirements might arise that require you to configure an S3 bucket with public access.

This highlights the need for acceptable exceptions to the rule when defining policies. You need the ability to configure which policies to apply or not to apply under certain situations. This also highlights the need for collaboration and diverse representation across teams.
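One way to encode acceptable exceptions is to keep them as data alongside the check, each with a reason and an approver, so that an excepted resource is reported separately instead of silently passing. The `PolicyException` type, its fields, and the simplified `blocks_public_access` check below are hypothetical and only sketch the idea.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PolicyException:
    resource: str     # address of the resource the exception covers
    reason: str       # documented business justification
    approved_by: str  # who signed off, e.g. a security reviewer


def evaluate(resources: dict[str, dict],
             check: Callable[[dict], bool],
             exceptions: list[PolicyException]) -> dict[str, list[str]]:
    """Run `check` on every resource; failures covered by an exception are listed separately."""
    excepted = {e.resource for e in exceptions}
    result = {"passed": [], "failed": [], "excepted": []}
    for address, config in sorted(resources.items()):
        if check(config):
            result["passed"].append(address)
        elif address in excepted:
            result["excepted"].append(address)
        else:
            result["failed"].append(address)
    return result


def blocks_public_access(config: dict) -> bool:
    # Hypothetical, simplified check: public ACLs and public policies are both blocked.
    return config.get("block_public_acls") is True and config.get("block_public_policy") is True
```

Keeping exceptions in the same reviewable place as the check means granting one, such as a public bucket for a static website, goes through the same review as any other policy change.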

Policy requirements change continuously. Much like a codebase that software developers build collaboratively, policies need review from others who may be affected by them. In the S3 bucket example above, a member of the DevOps team can collaborate with security to document when a public S3 bucket is acceptable and how to request an exception, which helps keep innovation flowing.

Static policies vs. software automation

Policy documents have traditionally been written and maintained using documentation systems such as a wiki, or document share. While these solutions are better than no documentation at all, they still fall short of fitting into the software development lifecycle of a DevOps engineer.

Using static documents for manual auditing and testing of policies is time-consuming and error-prone. Even if the auditing and testing are automated with scripts, those scripts live separately from the document defining the policy, and you still have to collect and share results in a meaningful way. More often than not, reconciling policy documents and testing that the policies are being followed are one-off exercises. The result is a snapshot in time rather than ongoing observability into environments that change constantly.

Both security and DevOps teams need a solution that meets all of the requirements outlined above: one where the policy documentation and the automated tests reside in the same file. Policies should live in source control like any other codebase. That allows tight collaboration between teams (technical and non-technical) and provides an audit record of who changed the policies, as well as who reviewed, approved, and merged those changes.

The solution should allow anyone within the company to easily execute policies and run them at any stage of the software development lifecycle (development, build time, and run time). When those policies fail, the output should be clear and actionable.
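For the output side, here is a hedged sketch: a hypothetical `report` function prints one line per control, adds a remediation hint for each failure, and returns a non-zero exit code so that a CI/CD job fails when any control fails. The `Result` type and its fields are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Result:
    control: str      # human-readable control title
    passed: bool
    remediation: str  # what to do when the control fails


def report(results: list[Result]) -> int:
    """Print one line per control and return a process exit code (0 means all passed)."""
    failures = [r for r in results if not r.passed]
    for r in results:
        print(f"[{'PASS' if r.passed else 'FAIL'}] {r.control}")
        if not r.passed:
            print(f"       fix: {r.remediation}")
    print(f"{len(results) - len(failures)}/{len(results)} controls passed")
    return 1 if failures else 0


# In a CI job, for example: raise SystemExit(report(results))
```

The same function works on a laptop and in a pipeline; only the caller decides whether the exit code should stop a build.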

Bridging the gap with Policy as Code

The concept and various incarnations of policy as code have been around for a number of years now. It refers to high-level code meant to manage and automate policy definition. The policies may be internal security controls for configuring infrastructure, a specific collection of controls set by compliance frameworks such as PCI, SOC 2, and HIPAA, or a set of best practices like CIS Benchmarks and AWS conformance packs.

With policy as code, each control is written as an automated attestation that, when executed, provides a simple true or false result. These policies are designed to run as part of a software development lifecycle, as opposed to traditional computer security policies captured in a document meant to be evaluated by humans. Policy as code contains both the documentation and rationale for the policy, as well as the automated tests for making assertions on the assets they are validating.
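The idea that documentation, rationale, and test travel together can be sketched as a single Python object whose `attest` method returns true or false. This is a generic illustration, not the syntax of any particular policy as code engine; the `Control` type and the S3 versioning control are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Control:
    """Documentation, rationale, and the automated test live together in one object."""
    control_id: str
    title: str
    rationale: str
    check: Callable[[dict[str, Any]], bool]  # the attestation: True means compliant

    def attest(self, asset: dict[str, Any]) -> bool:
        return bool(self.check(asset))


# Hypothetical control for illustration only.
S3_VERSIONING = Control(
    control_id="s3-versioning",
    title="S3 buckets have versioning enabled",
    rationale="Versioning lets you recover objects that were overwritten or deleted by mistake.",
    check=lambda bucket: bucket.get("versioning", {}).get("enabled") is True,
)
```

Because the title and rationale sit next to the check, a reviewer reading a pull request sees why a control exists and exactly what it tests.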

Policy as code is not without its hurdles, though. Many of the policy as code solutions on the market today require deploying and managing infrastructure, such as a server and agents, to run those policies. Oftentimes teams must learn a new language before they can derive value from the solution. While the long-term benefits are measurable, most companies don’t have 12 to 24 months to wait for a return on investment.

Policy as Code with Mondoo

The Mondoo Platform is a SaaS solution, so you don’t have to stand up any infrastructure. We provide an ever-increasing library of production-ready policies that you can use to scan your infrastructure. And you’ll receive your assessment in minutes. Not years, not weeks, not days…in minutes.

These policies include AWS-specific content such as our certified CIS Benchmarks for Amazon Linux and AWS best practices policies for EC2, S3, serverless, and storage. Additionally, we have policies as code to test AWS infrastructure you are managing with Terraform, as well as policies for testing Kubernetes clusters such as EKS and the applications you are deploying to Kubernetes.

Unlike other policy as code engines, Mondoo policies can be executed directly via cnspec, a lightweight binary that runs on Linux, Windows, and macOS. cnspec can connect to remote targets including servers (Linux, Windows, macOS), cloud environments (AWS, GCP, Azure), and other targets such as Kubernetes clusters, networking equipment, and even other SaaS platforms and services.

This means that as a DevOps engineer, you can run Mondoo policies from your laptop, as part of a CI/CD pipeline, or through our native AWS integration, which runs continuously against your AWS accounts.

You and your team can assess the configuration of your AWS accounts today by signing up for a free Mondoo Platform account. The easiest way to get started is by following our getting started guide.

Integrate security into the DevOps workflow with Mondoo

Mondoo addresses the pain that businesses adopting DevOps feel when trying to integrate security into the process. Years of working with companies on this journey taught us that we needed to create a new way to implement and achieve continuous compliance.

This new way enables DevOps and security practitioners to address security assessments and observability in minutes. By continuously running your policies against every change, in any CI/CD workflow, across all of your environments, you will improve your organization's security posture and create a strong foundation against attacks.